Dear Amped friends, today we’re sharing something big with you. If you’ve been following us, you know that Amped invests heavily in research and testing. We also partner with several universities to stay at the cutting edge of image and video forensics. During one of these research collaborations with the University of Florence (Italy), we discovered something important about PRNU-based source camera identification.

PRNU-based source camera identification has for years been considered one of the most reliable image forensics technologies. Given a suitable number of images from a camera, you can estimate the sensor’s characteristic noise pattern (which we call the Camera Reference Pattern, or CRP). You can then compare the CRP against a questioned image to determine whether it was captured by that specific exemplar. You can read more about PRNU here.
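
For readers who want to see the moving parts, here is a minimal Python/NumPy sketch of the textbook approach described in the PRNU literature. It is an illustration, not Amped’s implementation. Each image’s noise residual W = I - F(I) is extracted, the CRP is estimated with the maximum-likelihood formula K = Σ W_i·I_i / Σ I_i², and a questioned image is scored by correlating its residual with I·K. Assumptions: grayscale images of equal size, and a 3×3 mean filter as the denoiser F, where real tools use far better (typically wavelet-based) denoisers. The function names are hypothetical.

```python
import numpy as np


def noise_residual(img: np.ndarray) -> np.ndarray:
    """Noise residual W = I - F(I), with F a 3x3 mean filter.

    The mean filter is a crude stand-in for the wavelet-based denoisers
    normally used for PRNU extraction.
    """
    img = img.astype(np.float64)
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    smoothed = sum(padded[dy:dy + h, dx:dx + w]
                   for dy in range(3) for dx in range(3)) / 9.0
    return img - smoothed


def estimate_crp(images: list[np.ndarray]) -> np.ndarray:
    """Estimate the CRP as K = sum(W_i * I_i) / sum(I_i ** 2)."""
    num = np.zeros(images[0].shape)
    den = np.zeros(images[0].shape)
    for img in images:
        img = img.astype(np.float64)
        num += noise_residual(img) * img
        den += img ** 2
    return num / (den + 1e-12)  # small constant guards against all-black pixels


def crp_correlation(questioned: np.ndarray, crp: np.ndarray) -> float:
    """Normalized correlation between the questioned image's residual and I * K."""
    q = questioned.astype(np.float64)
    w = noise_residual(q).ravel()
    expected = (q * crp).ravel()
    w = w - w.mean()
    expected = expected - expected.mean()
    return float(w @ expected / (np.linalg.norm(w) * np.linalg.norm(expected)))
```

On synthetic flat-field images of a simulated sensor, an estimate built from about 20 images should already correlate well with the simulated pattern, while an image from a second simulated sensor should score close to zero.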

Since its beginnings, the real strength of PRNU-based source camera identification has been that false positives are extremely rare, as shown in widely acknowledged scientific papers. The sensor fingerprint proved so distinctive that researchers were even able to cluster images by source device, comparing the noise residuals extracted from single images in a one-vs-one fashion. We tested this one-vs-one approach on the VISION dataset, which consists of images captured with 35 portable devices (released roughly between 2010 and 2015), and it indeed worked.

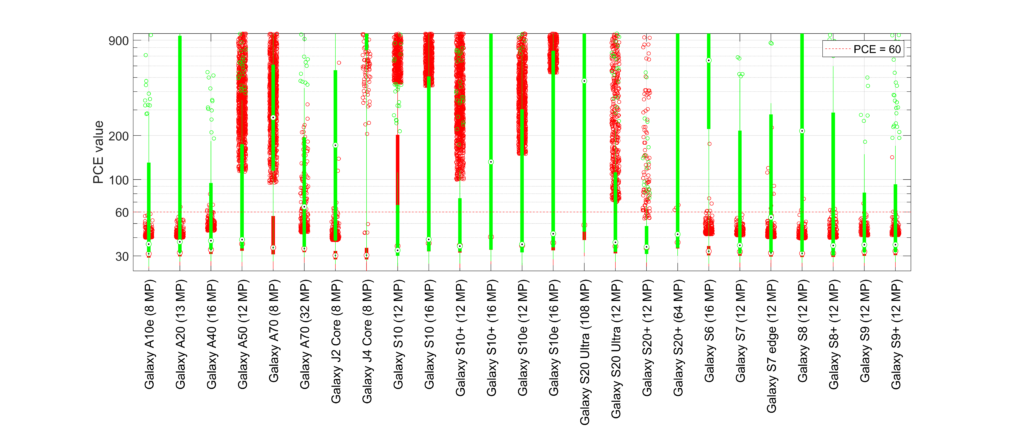

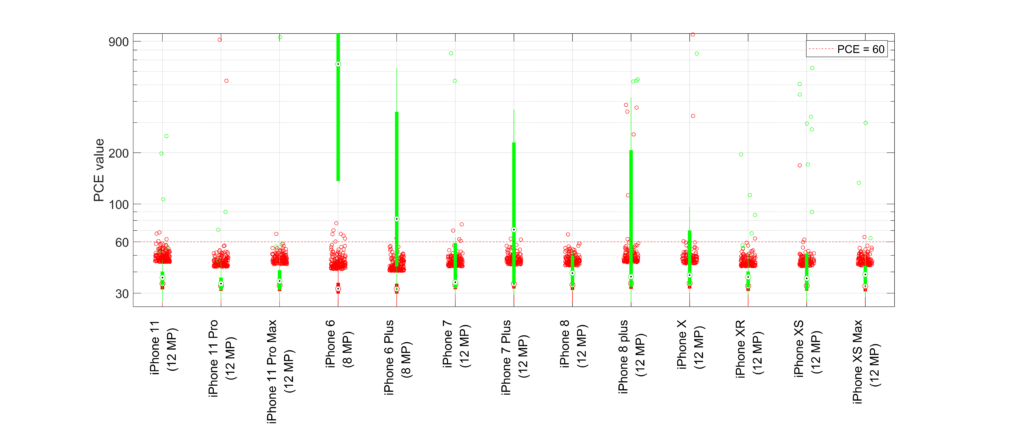

Take a look at the boxplot below. On the X-axis are the 35 devices of the VISION dataset (click here to see the list). For each device, the vertical green box shows the PCE values obtained by comparing pairs of images captured by that device (the thick box spans the 25th to the 75th percentile, the circled black dot is the median, and isolated circles are “outliers”). Red boxes and circles show the PCE values obtained by comparing the device’s images against images from other devices.

As expected, for most devices the green boxes lie well above the dashed horizontal line at 60, which is the PCE threshold commonly used to claim a positive match. Most notably, no red circles sit well above the PCE threshold. Yes, there are a few sporadic ones here and there, but their values stay below 100, so we can call them “weak false positives”.
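
To make the quantities in these plots concrete, here is a minimal NumPy sketch (our illustration, not the code behind the paper). `pce` computes the Peak-to-Correlation Energy of two equally sized noise residuals: the squared peak of their circular cross-correlation C, carrying the peak’s sign, divided by the mean of C² over all shifts outside a small neighborhood of the peak (11×11 here). `one_vs_one` scores every pair of images once and splits the scores into same-device (“green”) and different-device (“red”) populations, counting red scores above 60 as false positives and those below 100 as weak ones. Searching the whole correlation surface for the peak and the 11×11 exclusion window are common choices in the literature but assumptions here; the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, Hashable, Sequence

import numpy as np

PCE_THRESHOLD = 60.0   # threshold commonly used to claim a positive match
WEAK_FP_LIMIT = 100.0  # false positives below this are called "weak" in the post


def pce(a: np.ndarray, b: np.ndarray, exclude: int = 5) -> float:
    """Peak-to-Correlation Energy between two equally sized noise arrays.

    exclude: half-width of the square neighborhood around the peak that is
    left out of the energy estimate (11x11 for exclude=5).
    """
    a = a - a.mean()
    b = b - b.mean()
    # Circular cross-correlation via FFT: xcorr[s] = sum_x a[x + s] * b[x]
    xcorr = np.real(np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))))
    peak_idx = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    peak = xcorr[peak_idx]
    # Exclude the neighborhood of the peak, wrapping around the borders
    rows = (np.arange(-exclude, exclude + 1) + peak_idx[0]) % xcorr.shape[0]
    cols = (np.arange(-exclude, exclude + 1) + peak_idx[1]) % xcorr.shape[1]
    mask = np.ones(xcorr.shape, dtype=bool)
    mask[np.ix_(rows, cols)] = False
    energy = np.mean(xcorr[mask] ** 2)
    return float(np.sign(peak) * peak ** 2 / energy)


def one_vs_one(residuals: Sequence, devices: Sequence[Hashable],
               score: Callable = pce) -> dict:
    """Score every unordered pair once; split scores by same/different device."""
    same, different = [], []  # "green" and "red" populations of the boxplot
    for i, j in combinations(range(len(residuals)), 2):
        value = score(residuals[i], residuals[j])
        (same if devices[i] == devices[j] else different).append(value)
    false_positives = [v for v in different if v > PCE_THRESHOLD]
    return {
        "same": same,
        "different": different,
        "false_positives": len(false_positives),
        "weak_false_positives": sum(v < WEAK_FP_LIMIT for v in false_positives),
    }
```

Passing the scoring function as a parameter keeps the pairing logic independent of how PCE is computed, so a production-grade PCE routine could be swapped in.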

But with all the computations that happen inside modern devices, is PRNU still equally reliable? To answer this question, we downloaded thousands of images from the web and filtered them to keep only pictures captured with recent (2019+) smartphones. We also discarded images with traces of editing software in their metadata, and applied several heuristic rules to exclude images that did not appear to be camera originals. For some devices, we also collected images at two of the default resolutions. We then grouped the images by uploading user, assuming that different users take pictures with different exemplars and that each user owns only one exemplar. Now, take a look at what happened when we tested Samsung smartphones.

If you are in the field, you know the plot above is quite disturbing. It shows that, starting from the Galaxy S10, a striking number of false positives appear. Granted, someone might have two different accounts and upload images taken with the same exemplar to both of them. But that is not enough to explain so many false positives.

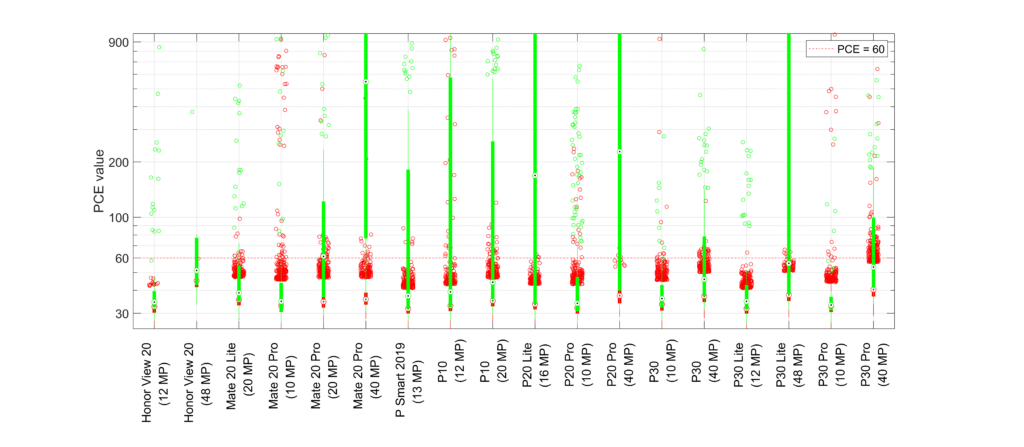

Turning to Huawei devices, we also find several false positives (although not as many as for Samsung models).

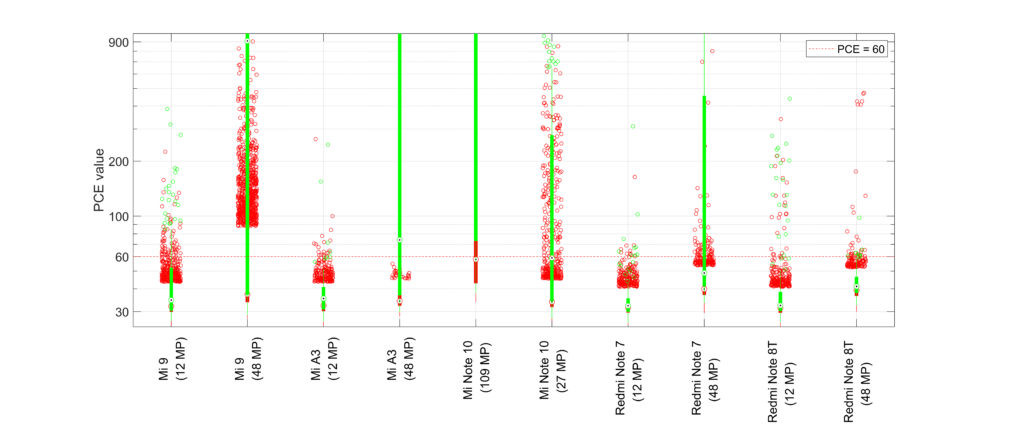

Xiaomi devices also raised the same concerns!

Apple smartphones, on the other hand, seem less affected by the problem.

These plots, together with a thorough explanation of what we did, are presented in a paper that we recently uploaded to arXiv.org https://arxiv.org/abs/2009.04878 (click here to download the PDF). We are also extending our experiments to the classical fingerprint-vs-image comparison and to DSLR cameras. The extended paper is undergoing peer review and will hopefully be published soon.

What does all of the above mean? Is PRNU no longer reliable? Should we stop using it altogether? No: the foundations of PRNU are, of course, still valid. Moreover, the tests presented above compare noise residuals extracted from single images, whereas PRNU is normally used to compare a CRP against a questioned image. Finally, the issues highlighted above only affect very recent devices. All that said, there is an urgent need to investigate in depth the reasons behind the false positives we observed.

Perhaps the internal processing applied by modern devices introduces some non-unique artifacts, which cause images from different exemplars to match. If so, it may be possible to exclude such artifacts and bring PRNU back to its known performance. For now, we recommend that, when working on a case, you carry out a dedicated validation by testing images captured with other exemplars of the same camera model (and, possibly, firmware).
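
A dedicated validation of this kind comes down to checking how the suspect camera’s CRP responds to images it did not take. The hypothetical helper below summarizes such a test under simple assumptions: given the PCE of the questioned image against the CRP, and the PCEs of images from other exemplars of the same model scored against that same CRP, it reports whether a match would be claimed at the usual threshold of 60, the observed false-positive rate, and whether the questioned image scores above every other exemplar. It is a sketch of the bookkeeping, not a validated protocol.

```python
def validation_report(suspect_pce: float, other_pces: list[float],
                      threshold: float = 60.0) -> dict:
    """Summarize a case-specific validation test (hypothetical helper).

    suspect_pce: PCE of the questioned image against the suspect camera's CRP.
    other_pces:  PCEs of images taken with *other* exemplars of the same model
                 (ideally the same firmware), each against that same CRP.
    """
    if not other_pces:
        raise ValueError("validation needs images from other exemplars")
    false_positives = [p for p in other_pces if p > threshold]
    return {
        "match_claimed": suspect_pce > threshold,
        "false_positive_rate": len(false_positives) / len(other_pces),
        "max_other_pce": max(other_pces),
        "above_all_others": suspect_pce > max(other_pces),
    }
```

A non-zero false-positive rate among other exemplars is exactly the warning sign discussed above: it means a PCE above 60 is not, on its own, enough to single out one exemplar of that model.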

Amped believes in, and focuses on, justice through science. As soon as we understood what we were seeing, Amped and the researchers at the University of Florence decided to share all of this with the research community. We could have waited until we had a final answer, but that seemed unethical to all of us. Instead, we are publishing these preliminary findings, hoping they will be a call to action for the research community.

We invite you to download and read the paper and, of course, to let us know if you spot anything wrong! We will post new updates on our blog as soon as we have them, so keep following us.