In the last few days, there’s been a lot of noise about the latest impressive research by Google. This is a selection of articles with bombastic titles:

- The Guardian: Real life CSI: Google’s new AI system unscrambles pixelated faces

- CNET: Google just made ‘zoom and enhance’ a reality — kinda

- Ars Technica: Google Brain super-resolution image tech makes “zoom, enhance!” a real thing

The actual research article by Google is available here.

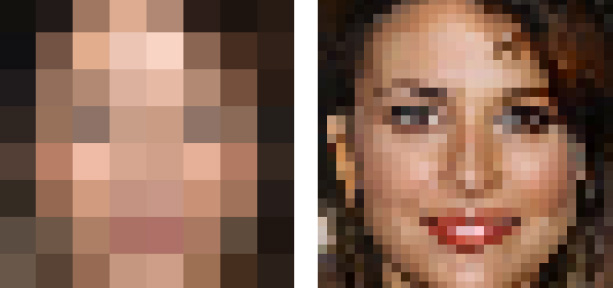

First of all, let me say that technically, the results are amazing. But this system is not simply an image enhancement or restoration tool. It creates new images based on a best guess, which may look similar to the data originally captured but may also be completely different from it.

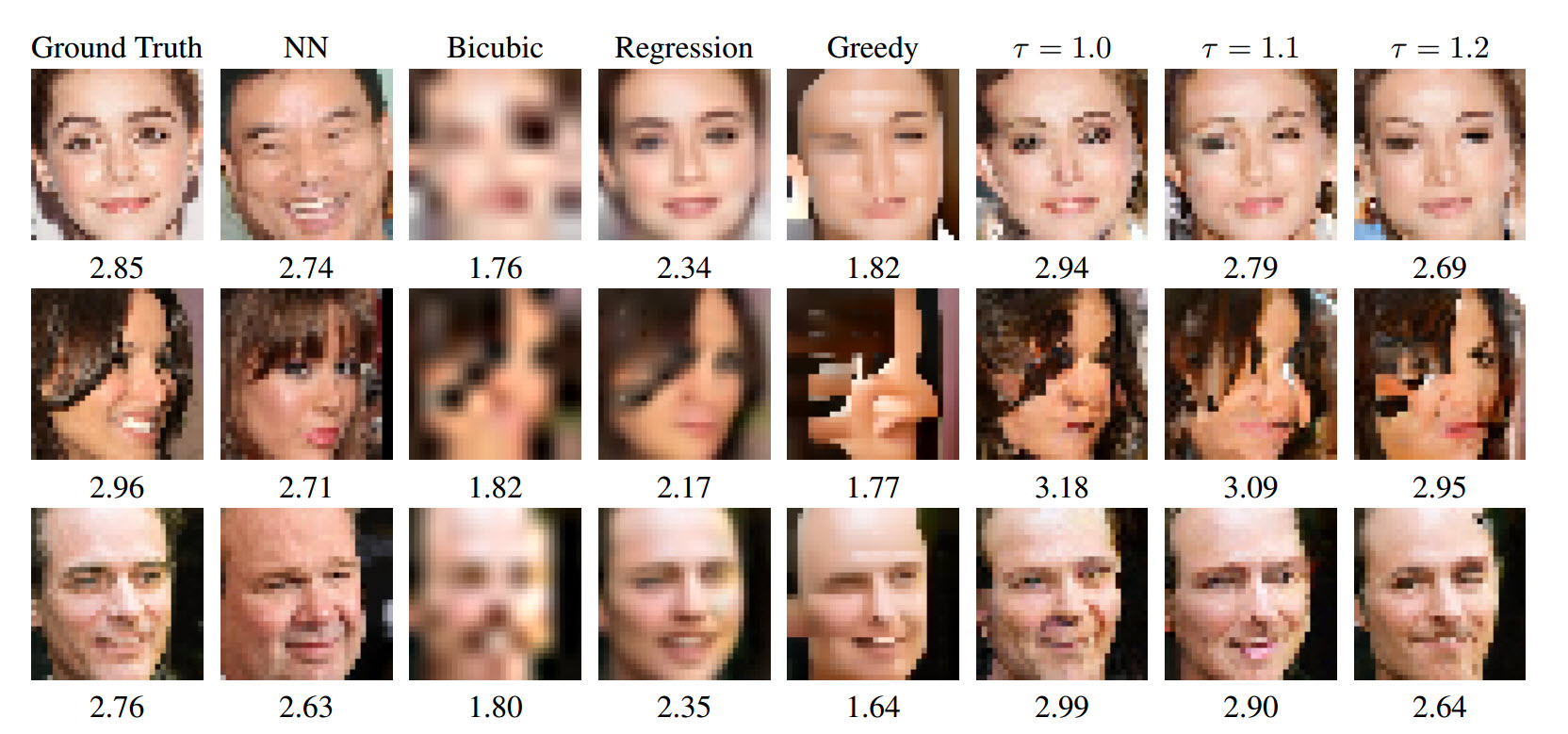

While this is probably one of the most interesting results, there have been many similar attempts in recent months, and I have already explained in a previous blog post why these systems cannot be used in forensic applications. I recommend going back to that article. The bottom line is that all these systems rely on training datasets, which pollute the image under analysis with external data. This carries a very concrete risk of creating images that look good (or at least better) but are not real at all, nor related to the actual case. I am not speaking about theoretical risks. Just look at the picture below (from Google’s paper) to see how different the generated images are from the ground truth.

My opinion is that these results shouldn’t be used for investigative purposes. It is simply better to have no information at all than to have wrong or misleading information. Relying on fake details can easily exculpate a guilty person or convict an innocent one.

Of course, all the above-mentioned articles try to balance their sensational headlines against a more technically savvy opinion, but they should make the distinction much clearer.

For example, in the article published in The Guardian: “Google’s neural networks have achieved the dream of CSI viewers everywhere: the company has revealed a new AI system capable of “enhancing” an eight-pixel square image, increasing the resolution 16-fold and effectively restoring lost data.”

This system is not “restoring” lost data! It is creating new data based on some set expectations. Lost data is lost forever!
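To make the point concrete, here is a minimal sketch in Python. It is my own toy illustration, not Google's method: I assume a simple block-averaging downsampler (the hypothetical `downsample` function below) and a 32×32 to 8×8 reduction, which matches the "eight-pixel square" and "16-fold" figures in the quote. It builds three clearly different 32×32 images that all collapse to exactly the same 8×8 image. Given only those 64 low-resolution values, nothing can tell which of the three was the original.

```python
def downsample(img, factor):
    """Average each factor x factor block into one pixel (simple box filter).

    This is an assumed, simplified model of resolution loss, used only
    for illustration.
    """
    size = len(img) // factor
    out = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [
                img[by * factor + dy][bx * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) // len(block))
        out.append(row)
    return out


SIZE, FACTOR = 32, 4

# Three different high-resolution "scenes" (grayscale values 0..255).
checker = [[255 * ((x + y) % 2) for x in range(SIZE)] for y in range(SIZE)]
stripes = [[255 * (x % 2) for x in range(SIZE)] for y in range(SIZE)]
inverse = [[255 - v for v in row] for row in checker]

low_checker = downsample(checker, FACTOR)
low_stripes = downsample(stripes, FACTOR)
low_inverse = downsample(inverse, FACTOR)

# The high-resolution images differ from each other...
print("high-res images differ:",
      checker != stripes and checker != inverse and stripes != inverse)

# ...but their low-resolution versions are identical.
print("low-res images identical:",
      low_checker == low_stripes == low_inverse)
```

Going back from 8×8 to 32×32 means producing 1,024 pixel values from 64. The extra values have to come from somewhere other than the image itself, and in a learned system that somewhere is the training data.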

Luckily, at the end, the Guardian article adds: “Of course, the system isn’t capable of magic. While it can make educated guesses based on knowledge of what faces generally look like, it sometimes won’t have enough information to redraw a face that is recognisably the same person as the original image. And sometimes it just plain screws up, creating inhuman monstrosities. Nonetheless, the system works well enough to fool people around 10% of the time, for images of faces.”

Also, the article published in Ars Technica starts with some CSI promises, but at the end states: “It’s important to note that the computed super-resolution image is not real. The added details—known as “hallucinations” in image processing jargon—are a best guess and nothing more. This raises some intriguing issues, especially in the realms of surveillance and forensics. This technique could take a blurry image of a suspect and add more detail—zoom! enhance!—but it wouldn’t actually be a real photo of the suspect. It might very well help the police find the suspect, though.”

So, before you ask, no, we are not implementing this algorithm – the purpose of forensics is to serve justice through science, basing results on the application of the proper methodologies using the right data.