I have a dear old friend who is a brilliant photographer and artist. Years ago, when he was teaching at the Art Center College of Design in Pasadena, CA, he would occasionally ask me to substitute for him in class as he travelled the world to take photos. He would introduce me to the class as the person at the LAPD who authenticates digital media – the guy who inspects images for evidence of Photoshopping. Then, he’d say something to the effect that I would be judging their composites, so they’d better be good enough to fool me.

Last year, I wrote a bit about my experiences authenticating files for the City / County of Los Angeles. Today, I want to address a common misconception about authentication – proving a negative.

So many requests for authentication begin with the statement, “tell me if it’s been Photoshopped.” This request for a “blind authentication” asks the analyst to prove a negative. It’s a very tough request to fulfill.

In general, a reasonable degree of certainty can be reached if the image is verified to be an original from a specific device, shows no signs of recapture and, where possible, has a consistent sensor noise pattern (PRNU, photo response non-uniformity).
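To make the PRNU idea a little more concrete, here is a minimal sketch in Python. It is not how any particular product implements the test. It assumes a camera "fingerprint" has already been estimated, uses a crude 3x3 mean filter in place of a proper denoiser, and runs on synthetic data only. All function names and the 64-pixel block size are illustrative choices. The idea is that a region that did not come from the camera's sensor should correlate poorly with that camera's fingerprint.

```python
import numpy as np

def box_blur(img, k=3):
    """Crude stand-in for a denoiser: k x k mean filter with edge padding."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def noise_residual(img):
    """Image minus its denoised version; what is left is mostly noise."""
    img = img.astype(np.float64)
    return img - box_blur(img)

def normalized_correlation(a, b):
    """Zero-mean normalized correlation between two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def blockwise_prnu_scores(img, fingerprint, block=64):
    """Correlate the residual with (fingerprint * image), block by block."""
    img = img.astype(np.float64)
    residual = noise_residual(img)
    expected = fingerprint * img  # PRNU is modeled as multiplicative noise
    h, w = img.shape
    scores = {}
    for y in range(0, h - block + 1, block):
        for x in range(0, w - block + 1, block):
            sl = (slice(y, y + block), slice(x, x + block))
            scores[(y, x)] = normalized_correlation(residual[sl], expected[sl])
    return scores

# Synthetic demonstration: two hypothetical cameras with different fingerprints.
rng = np.random.default_rng(0)
h, w = 128, 128
scene = np.tile(np.linspace(100.0, 200.0, w), (h, 1))  # smooth gradient
k_a = 0.02 * rng.standard_normal((h, w))                # camera A fingerprint
k_b = 0.02 * rng.standard_normal((h, w))                # camera B fingerprint
shot_a = scene * (1 + k_a) + rng.standard_normal((h, w))
shot_b = scene * (1 + k_b) + rng.standard_normal((h, w))

# Paste the top-left block of camera B's shot into camera A's shot.
tampered = shot_a.copy()
tampered[0:64, 0:64] = shot_b[0:64, 0:64]

scores = blockwise_prnu_scores(tampered, k_a)
for origin, score in sorted(scores.items()):
    print(origin, round(score, 3))
```

In this toy setup the pasted block scores close to zero against camera A's fingerprint, while the untouched blocks score much higher. Real images are far less cooperative: scene texture, compression, and resizing all weaken the correlation.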

However, it is very common nowadays to work on images that are not originals but have been shared on the web or through social media, usually multiple consecutive times. This means that metadata and other information about the format are gone, and the traces of tampering – if any – have usually been covered by multiple steps of compression and resizing. So it is easy to tell that the picture is not an original, but it’s very difficult to rely on pixel statistics to evaluate possible tampering at the visual level.

Here’s what the US evidence codes say about authentication (there are variations in other countries, but the basic concept holds):

- It starts with the person submitting the item. They (attorney, witness, etc.) swear / affirm that the image accurately depicts what it’s supposed to depict – that it’s a contextually accurate representation of what’s at issue.

- This process of swearing / affirming comes with a bit of jeopardy. One swears “under penalty of perjury.” Thus, the burden is on the person submitting the item to be absolutely sure the item is contextually accurate and not “Photoshopped” to change the context. If they’re proven to have committed perjury, there are fines / fees and potentially jail time involved.

- The person submits the file to support a claim. They swear / affirm, under penalty of perjury, that the file is authentic and accurately depicts the context of the claim.

Then, someone else cries foul. Someone else claims that the file has been altered in a specific way – item(s) deleted / added – scene cropped – etc.

It’s this specific allegation of forgery that is needed to test the claims. If there is no specific claim, then one is engaged in a “blind” authentication (attempting to prove a negative).

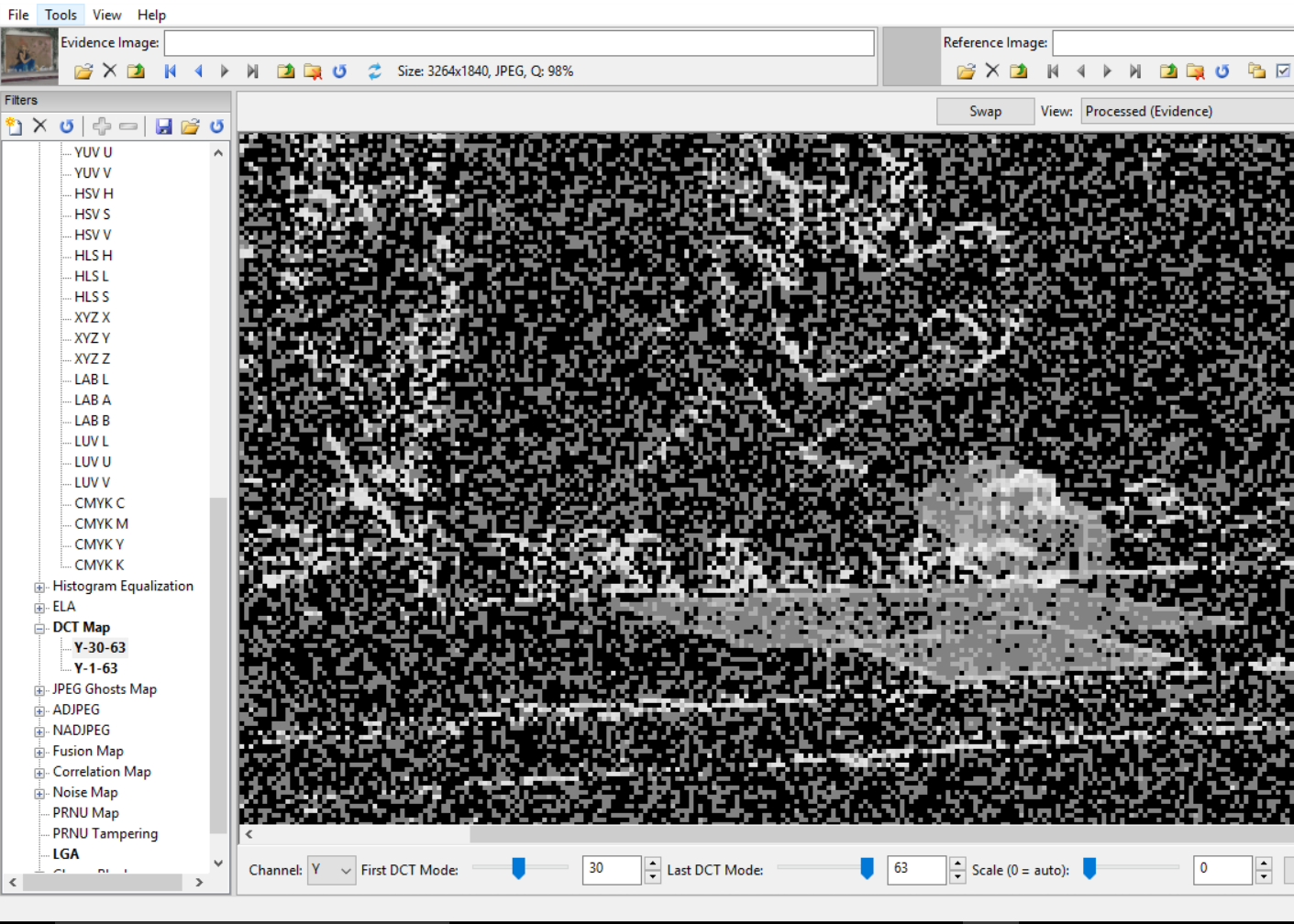

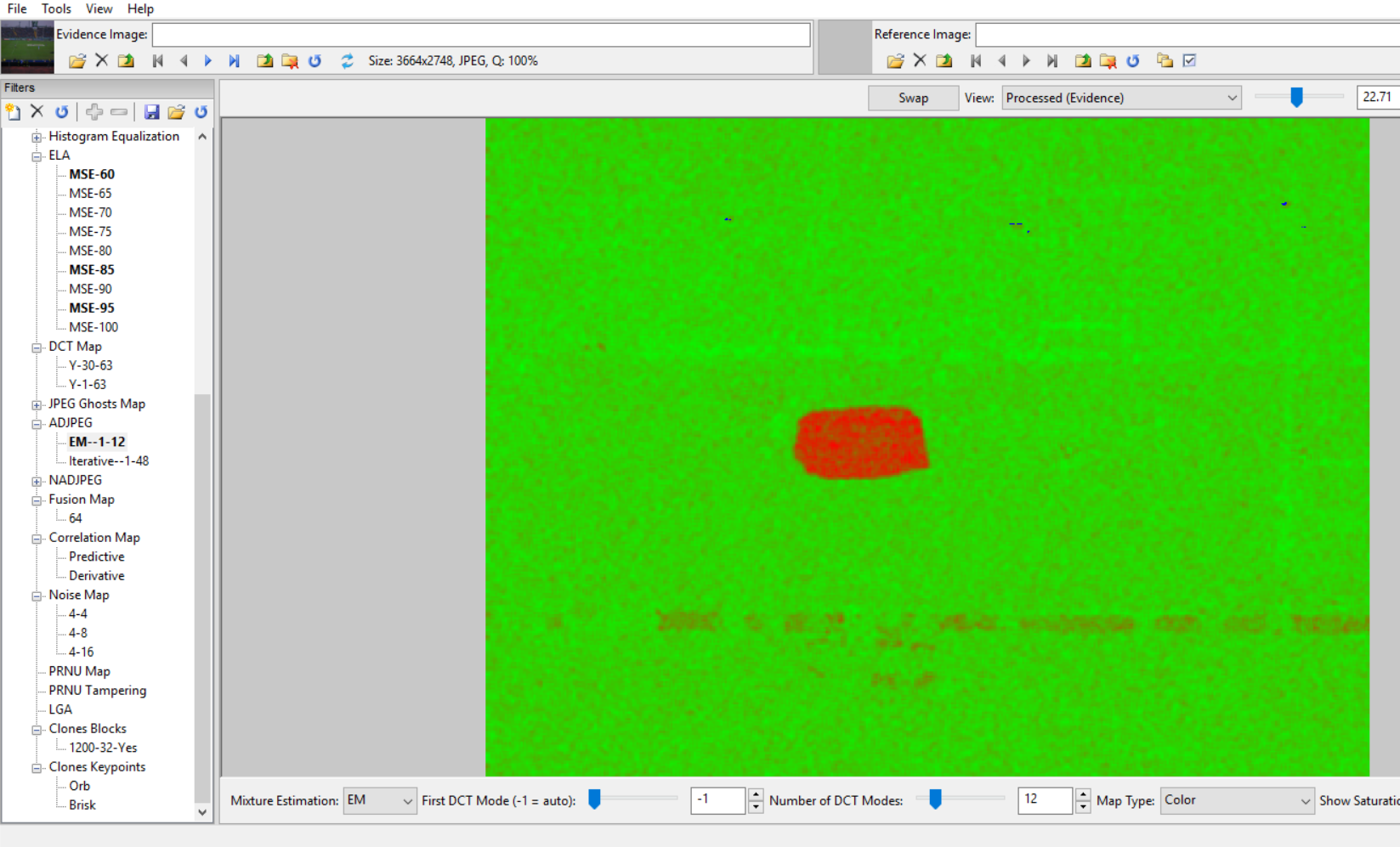

Once a specific allegation of forgery is received, the testing of the file can begin. In the case above, the allegation of an item being removed from the scene is supported by the results of several tests, including an examination of the DCT Map.

Tools like Photoshop allow the artist to fill areas with a copy of data from somewhere else or with data from the background. When this happens, there will likely be differences in the compression across the image. This can often be detected with the ADJPEG / NADJPEG tests.
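As a rough illustration of why pasted content can leave compression traces, consider that JPEG compresses an image in 8x8 pixel blocks. Content copied from another position or another file may carry a block grid that no longer lines up with the rest of the image. The Python sketch below is not the ADJPEG / NADJPEG algorithm. It is a simplified stand-in that estimates where the block grid sits by looking for the strongest pixel jumps, and it uses synthetic data in which "compression" is faked by replacing each block with its average. The helper names (`blockify`, `grid_offset`, `region_grid_offset`) are hypothetical.

```python
import numpy as np

def blockify(img, block=8):
    """Toy 'compression': replace each block with its mean value."""
    h, w = img.shape
    means = img.reshape(h // block, block, w // block, block).mean(axis=(1, 3))
    return np.kron(means, np.ones((block, block)))

def grid_offset(gray, block=8):
    """Estimate the (row, col) at which the block grid starts, modulo block."""
    g = gray.astype(np.float64)
    # Entry j is the total jump between column j and column j + 1.
    col_diff = np.abs(np.diff(g, axis=1)).sum(axis=0)
    row_diff = np.abs(np.diff(g, axis=0)).sum(axis=1)
    col_energy = [col_diff[p::block].mean() for p in range(block)]
    row_energy = [row_diff[p::block].mean() for p in range(block)]
    # The strongest jump sits just before the first pixel of a new block.
    col_phase = (int(np.argmax(col_energy)) + 1) % block
    row_phase = (int(np.argmax(row_energy)) + 1) % block
    return row_phase, col_phase

def region_grid_offset(gray, y0, x0, height, width, block=8):
    """Grid offset of a region, expressed in whole-image coordinates."""
    r, c = grid_offset(gray[y0:y0 + height, x0:x0 + width], block)
    return (y0 + r) % block, (x0 + c) % block

# Synthetic demonstration.
rng = np.random.default_rng(1)
host = blockify(rng.uniform(0, 255, (64, 64)))
donor = blockify(rng.uniform(0, 255, (64, 64)))

# Copy a patch whose grid is shifted by (3, 5) and paste it into the host.
forged = host.copy()
forged[16:40, 24:48] = donor[3:27, 5:29]

print("whole image:", grid_offset(forged))
print("pasted area:", region_grid_offset(forged, 16, 24, 24, 24))
```

Here the image as a whole reports a grid starting at (0, 0), while the pasted area reports a different offset, which is the kind of inconsistency an analyst would want to examine further. Real JPEG data needs far more robust statistics than this, since block edges are subtle and scene content interferes.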

You see, changes to the original image do not come without consequences. The image, when re-saved, will likely be re-compressed. This recompression will show up in the tests. The image, if cropped, will no longer match the specs of similar images taken from the same type of device. It’s really hard to commit a successful forgery.

But again, the analysis must begin with a specific and testable allegation of forgery. Once the allegation is received, the analyst chooses the appropriate tests and the work begins. Unfortunately, this is not the case in all countries. In some places, a clear allegation must be provided; in others, the questions are much more open, and a definitive answer is much harder to find.

This goes in parallel with another topic: even when the authenticity of your images and videos is not questioned, verifying them must be the first step. Before even starting the analysis, you must determine whether you are working on an original (the best version of the image available) or on something that has already been improperly processed or converted with lossy compression. It’s your duty as an image / video analyst to get the best possible result and to respect the chain of custody.

Amped Authenticate, with its more than three dozen filters and tools, is the most comprehensive and complete toolset on the market today. Authenticate provides a suite of powerful tools to determine whether an image is an unaltered original, an original generated by a specific device, or the result of manipulation with photo editing software and thus may not be accepted as evidence. Competing products are focused on one or a relative few tools. Authenticate puts the power of multiple tests, procedures, and reporting in one package to improve the user’s ability to detect tampered images or determine originality.

If you’d like to know more about our tools and training options, contact us today.