Things are getting awful. Last Friday’s events left many people dead and injured in Paris. Our prayers are with the families of the victims and the people of France. The situation in Paris is sorrowful. Our colleague Matthew is traveling to Paris today for Milipol. Despite the situation, the organizers have confirmed that the event will go ahead regardless, with additional security measures.

Since we are at the heart of Europe, all the media coverage these days is about Paris, but we must not forget that this is, sadly, just one of many tragic events taking place. The same day, similar attacks killed 26 people and injured more than 60 in Baghdad, and the day before, at least 43 were killed and more than 200 injured in Beirut. And let’s not forget the massacre in Nigeria which killed 2000 people at the beginning of the year.

A problem of credibility

Unfortunately, these days it is becoming easier to be a journalist and more difficult to be a good one. A lot of the information in the news has later been reported to be wrong, and anti-spoof websites are uncovering the truth. But who is checking the correctness of these sources (and of the spoof-hunters)? Very often, pictures or videos are at the center of the discussion.

As you know, we have the technologies to evaluate the authenticity of a picture. But there are situations where there is little technology involved, and we must rely mostly on open source investigations.

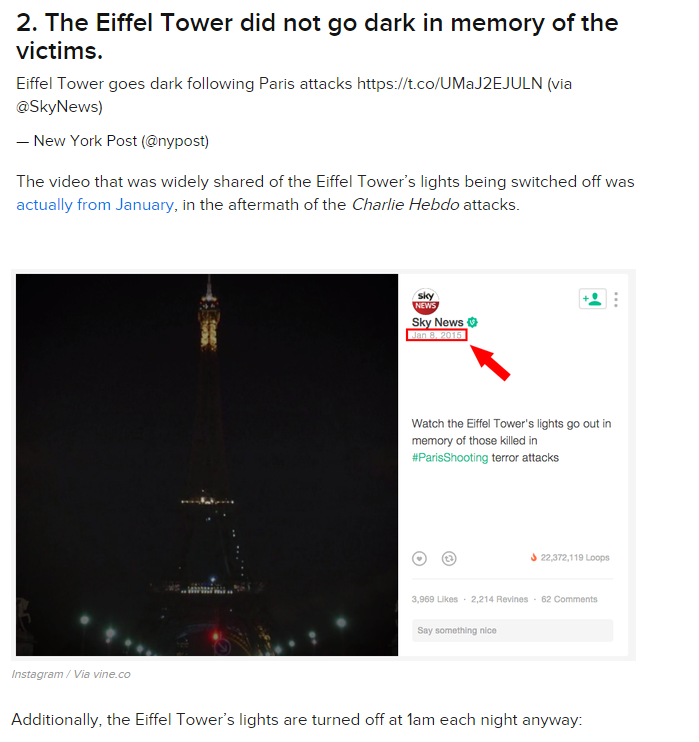

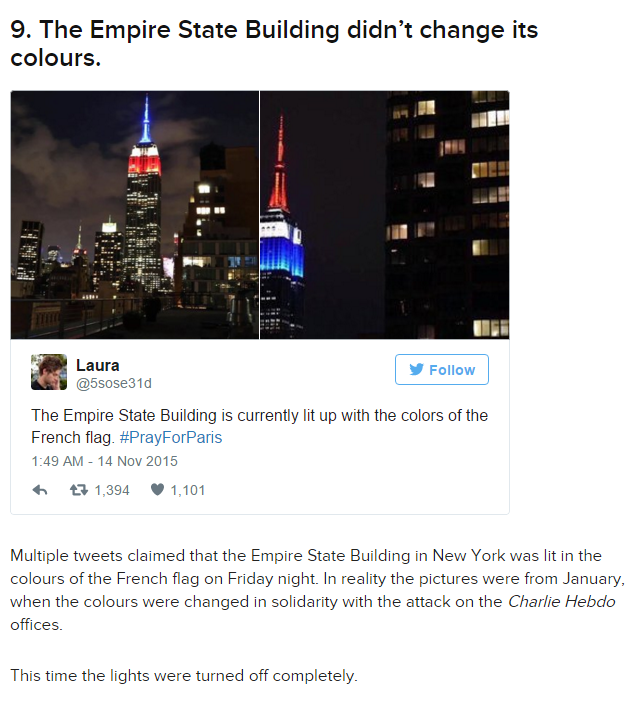

Here you can find many rumors, published by newspapers both in print and online, that have since been debunked.

These are three of the most interesting “false facts” (just go to Tom Phillips’ post on BuzzFeed to get the source and see more).

Can you see the common pattern here?

The problem is not the picture, but how it’s used

A picture is used to convey a message. Whenever the message transmitted by the picture is true, we say that the picture is authentic. In these cases, the problem is not the picture itself, but the context in which it has been placed. The pictures are fine, but they have been attributed to the wrong event. So even a rigorous analysis of the image itself turns out to be mostly useless. In this case, a better way to investigate is to search the Internet for similar or older versions of the picture used in another context. This can easily be done with reverse image search tools like TinEye or Google Images (also directly accessible within Amped Authenticate).
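To give an idea of why a reverse search can still find a picture after it has been resaved or slightly adjusted, here is a minimal Python sketch of a perceptual “average hash”. It is only an illustration of the general idea of near-duplicate matching: it is not how TinEye, Google Images or Amped Authenticate work internally. The function names (`shrink`, `average_hash`, `hamming`) and the synthetic test images are made up for this example, and images are assumed to be plain grids of grayscale values at least 8 pixels wide and high.

```python
from typing import List

Gray = List[List[int]]  # rows of grayscale values in the range 0-255


def shrink(img: Gray, size: int = 8) -> List[List[float]]:
    """Downscale to size x size by averaging the pixels in each grid cell.

    Assumes the image is at least `size` pixels in both dimensions.
    """
    h, w = len(img), len(img[0])
    out = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            cell = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(cell) / len(cell))
        out.append(row)
    return out


def average_hash(img: Gray, size: int = 8) -> int:
    """One bit per cell: 1 if the cell is brighter than the overall mean."""
    flat = [v for row in shrink(img, size) for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


# Synthetic 32x32 images (hypothetical data, just for the demonstration).
original = [[8 * x for x in range(32)] for _ in range(32)]         # left-to-right gradient
brighter = [[min(255, v + 10) for v in row] for row in original]   # same picture, brightened
unrelated = [[8 * y for _ in range(32)] for y in range(32)]        # top-to-bottom gradient

print("original vs brighter copy:", hamming(average_hash(original), average_hash(brighter)))
print("original vs unrelated:    ", hamming(average_hash(original), average_hash(unrelated)))
```

A small distance suggests two files are versions of the same picture, while a large one suggests different content. The hash says nothing about authenticity: it only helps to locate earlier copies, whose context then has to be checked by hand.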

Not a funny joke

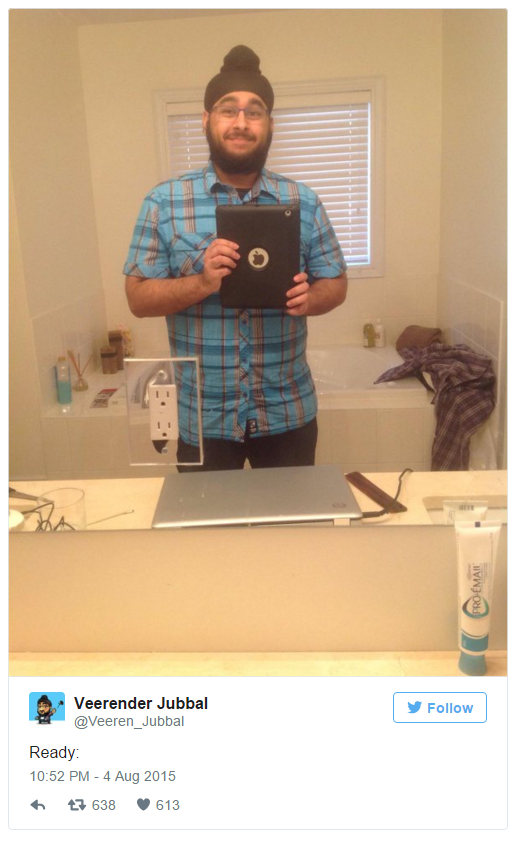

But of all the fakes going around on the net, this is probably the worst (source):

An image began to circulate on social media Saturday afternoon claiming to show one of the Paris attackers wearing a suicide bomb vest.

The image was even shared by one of the largest — though unofficial — pro-ISIS channels on Telegram, the app that the extremist group used to take credit for the attacks in Paris.

This is the original picture.

Italian television did not do well in this case 😉

We have not yet been able to find the “original fake picture” uploaded to Twitter, since the tweet has been deleted and only resaved versions are still around. It would be nice to have it to analyze.

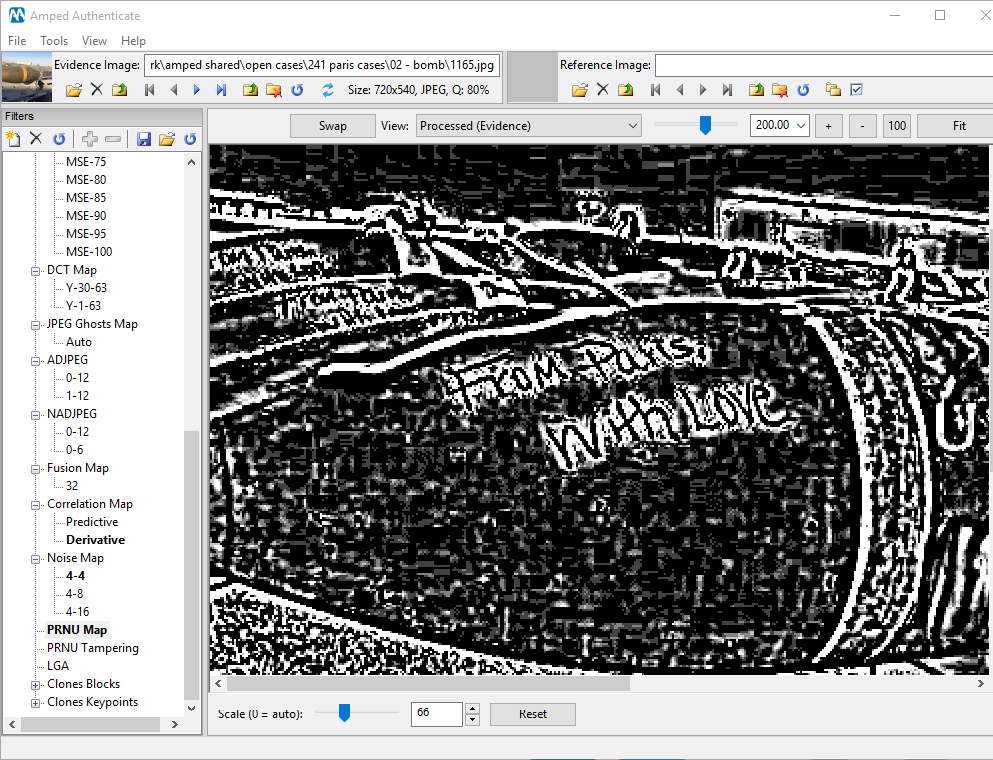

From Paris, with Love

The latest one is just from this morning. I saw this on the TV news while having breakfast. The picture was attributed to the French announcement of the bombing of Raqqa, so it supposedly portrays bombs inscribed with “From Paris, with Love”.

The problem is that the original source appears to be the parody Facebook page “U.S Army W.T.F.! moments”. Well, not exactly the most reliable source. If you look on the Internet for other versions, they all have the watermark of the USAWTF page (not very visible, but at the center; enhanced version below).

The analysis of the pixel statistics features is not very relevant here.

Some of the filters in Authenticate show strange artifacts around the text, in the form of a white halo. However, given the small size of the photo and the very visible blockiness caused by image compression, there is a good chance that this effect was caused by JPEG compression.

Authenticating pictures from social media with analytical techniques is very challenging, because they have generally gone through many rounds of recompression, resizing and post-processing, which wipe out the traces of manipulation. In these cases, an accurate analysis of the informative content is the only thing you can do, unless there are enough elements to analyze the coherence of perspective, light and shadows. If you want to learn more about the difficulties of social media image authentication, I suggest reading this excellent post from the Reveal Project website. I cite here some of the most relevant parts:

[…] Essentially, the investigative scenario we are dealing with is the one depicted in Figure 3: we know that, for every forgery, an original (first-posted) forgery once existed and may still exist out there, but what we have to work with is a multitude of images mediated by various Web and social media platforms, which may or may not have destroyed all useful traces. […]

[…] Whether the results of our evaluation were hopeful or disappointing is an issue of interpretation: on the one hand, 47 out of the 82 cases evaded all detection – and allowing for the possibility of overestimation, the true detection capabilities of today’s state-of-the-art could be even below that level. What is worse, if we exclude the three simplest cases, out of the 8,580 remaining files only 333 were successfully detected. That means: by picking a random forged image from the dataset, we roughly have a 3% chance of a successful analysis.

Not all is bleak, however: our investigation showed how, in about half of the investigated cases, some even 10 years old, a few images exist out there that are so close to the original forgery that they can be successfully analyzed using today’s state-of-the-art. Figure 7 gives a few such examples of successful analyses. […]

The bottom line

In these days of tragic events, it is amazing how much misinformation is coming from different sources. Both professional media outlets and ordinary people are racing to be first with the news, often at the expense of the accuracy and reliability of their reports. In a very short time, we have seen a flood of wrong statements, manipulated pictures and erroneous attributions. Until some time ago we followed the credo “seeing is believing”, but I bet that we will shortly see a shift to the opposite. People will start to lose trust in the media, and the default reaction to a strong picture will be “this is fake”, even when it’s not. Everybody knows that nowadays basically all fashion and advertising photography is heavily retouched. There is a real risk that tomorrow people will take for granted that every picture released by news outlets is fake or misattributed to convey a stronger, but wrong, message. Is misinformation the future of information?